From: Poland

Location: Białystok

On Useme since 9 December 2017

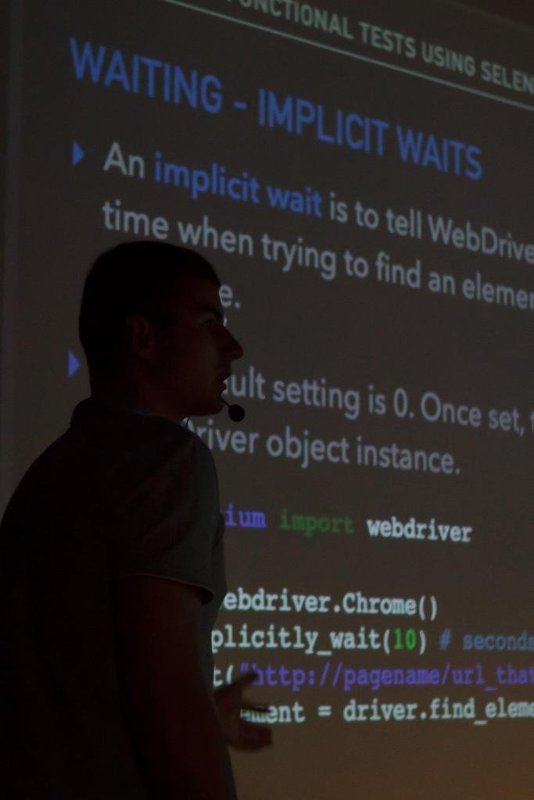

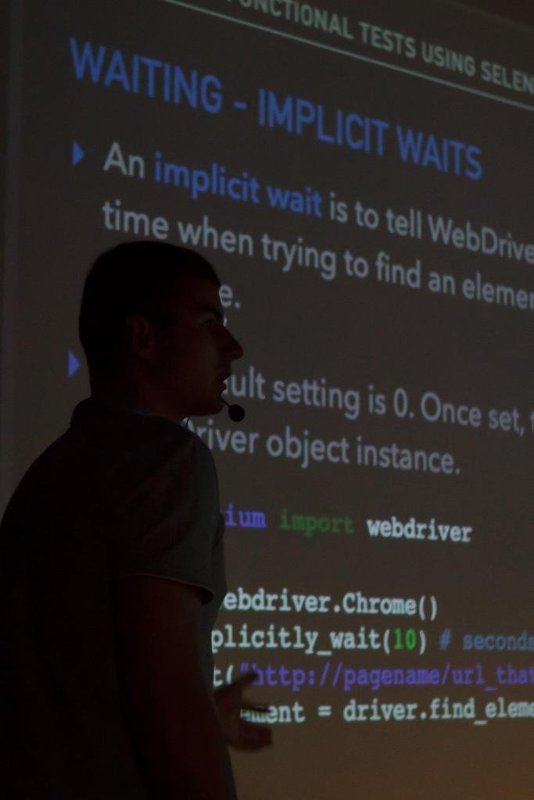

Good knowledge and usage of technologies:

- Backend: Python, Django, third-party Python and Django libraries, DRF
- Databases: MySQL, PostgreSQL, MongoDB
- Deployment: virtualenv, pip, Bash, Nginx, uWSGI, Gunicorn, supervisor
- Testing: Selenium, pytest, unittest
- NLP: NLTK

Good knowledge and usage of tools that support project work:

- Repository: Git, git-flow
- Management: Bitbucket, GitHub, Google Docs, LaTeX, Jira, Google Calendar
- Communication: IRC, Slack, Stack Overflow
- Celery, RabbitMQ, Redis
- Docker
- Rancher

Operating Systems:

- GNU / Linux
- Mac OS X

In addition, during my university years I took an interest in and gained knowledge of:

- OpenCV (a computer system that identifies people by their facial features, written in Python using NumPy and a Haar cascade classifier)

- PySpark (analysis of server logs from the studio: writing and running a Python script that, for a given user, computes the total time of that user's operations on each unique server (host) and the percentage of the total operation time spent on each host)

- Google App Engine (an application using at least three services offered by the platform, including a database service that stores at least two entity types in a one-to-many relationship; written in Python and based on the MVT pattern)

- MasterWorker (a distributed application written in Python, running in a Condor environment and using the Master Worker library, that recovers a "lost" password from a given MD5 hash)

Additional activities:

- Participant at PyStok (meetings of Białystok's Python community)
- Long-distance running (half marathons, marathons)
- Sports instructor (swimming and karate)
- Teacher / lifeguard at youth camps (travel agency Funclub)

EDIOM, LLC

Projects:

- Universal PubMed Crawler: crawling scientific publications to find as many freely available scientific articles as possible. A Python project based on asynchronous tasks (Celery), web architecture (Django framework) and...
